AISN #47: Reasoning Models

Manage episode 467280707 series 3647399

Plus, State-Sponsored AI Cyberattacks.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

Reasoning Models

DeepSeek-R1 has been one of the most significant model releases since ChatGPT. After its release, the DeepSeek's app quickly rose to the top of Apple's most downloaded chart and NVIDIA saw a 17% stock decline. In this story, we cover DeepSeek-R1, OpenAI's o3-mini and Deep Research, and the policy implications of reasoning models.

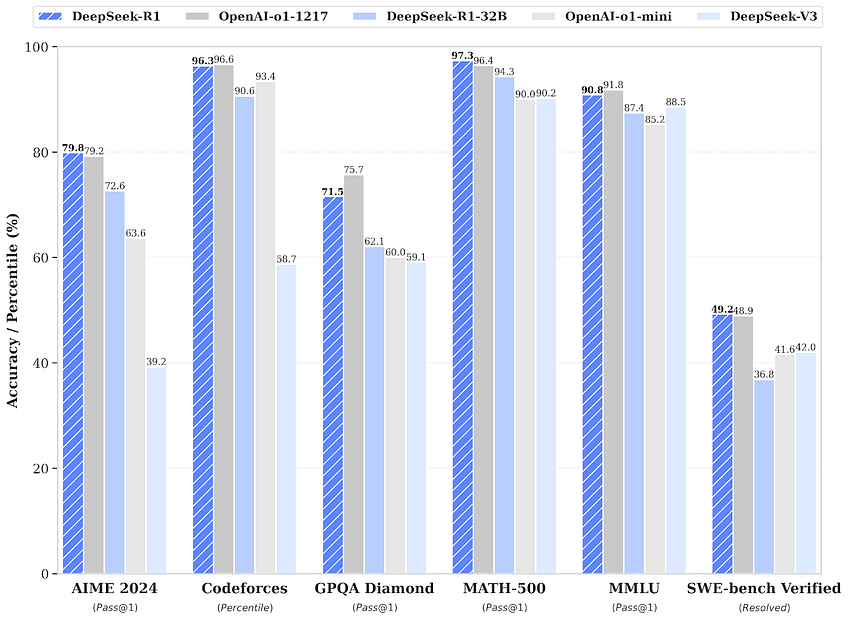

DeepSeek-R1 is a frontier reasoning model. DeepSeek-R1 builds on the company's previous model, DeepSeek-V3, by adding reasoning capabilities through reinforcement learning training. R1 exhibits frontier-level capabilities in mathematics, coding, and scientific reasoning—comparable to OpenAI's o1. DeepSeek-R1 also scored 9.4% on Humanity's Last Exam—at the time of its release, the highest of any publicly available system.

DeepSeek reports spending only about $6 million on the computing power needed to train V3—however, that number doesn’t include the full [...]

---

Outline:

(00:13) Reasoning Models

(04:58) State-Sponsored AI Cyberattacks

(06:51) Links

---

First published:

February 6th, 2025

Source:

https://newsletter.safe.ai/p/ai-safety-newsletter-47-reasoning

Want more? Check out our ML Safety Newsletter for technical safety research.

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

59 episodes