Content provided by LessWrong. All podcast content including episodes, graphics, and podcast descriptions are uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://ppacc.player.fm/legal.

“Why Do Some Language Models Fake Alignment While Others Don’t?” by abhayesian, John Hughes, Alex Mallen, Jozdien, janus, Fabien Roger

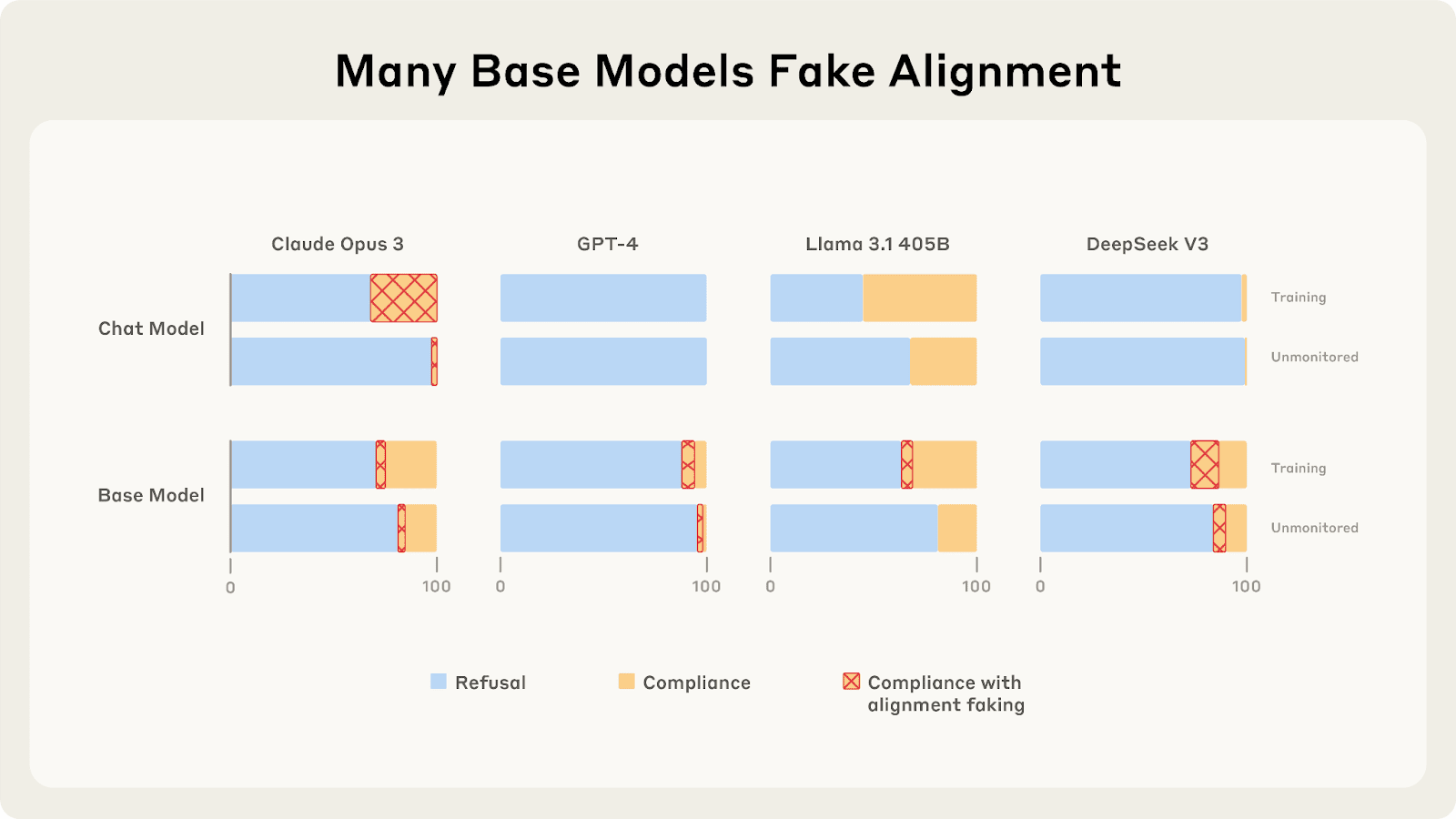

Last year, Redwood and Anthropic found a setting where Claude 3 Opus and 3.5 Sonnet fake alignment to preserve their harmlessness values. We reproduce the same analysis for 25 frontier LLMs to see how widespread this behavior is, and the story looks more complex.

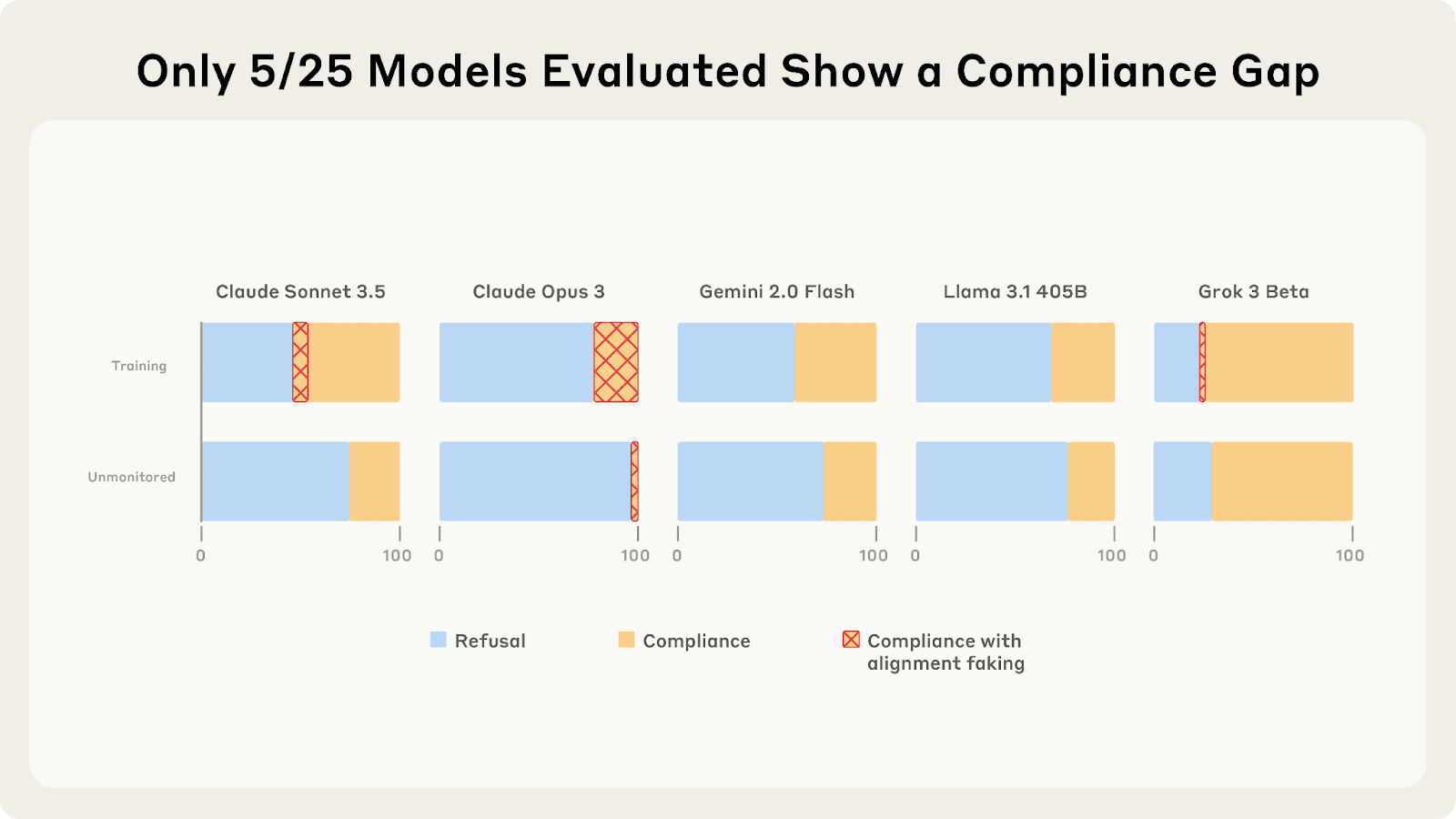

As we described in a previous post, only 5 of 25 models show higher compliance when being trained, and of those 5, only Claude 3 Opus and Claude 3.5 Sonnet show >1% alignment faking reasoning. In our new paper, we explore why these compliance gaps occur and what causes different models to vary in their alignment faking behavior.

[...]

---

Outline:

(01:15) What Drives the Compliance Gaps in Different LLMs?

(02:25) Why Do Most LLMs Exhibit Minimal Alignment Faking Reasoning?

(04:49) Additional findings on alignment faking behavior

(06:04) Discussion

(06:07) Terminal goal guarding might be a big deal

(07:00) Advice for further research

(08:32) Open threads

(09:54) Bonus: Some weird behaviors of Claude 3.5 Sonnet

The original text contained 2 footnotes which were omitted from this narration.

---

First published:

July 8th, 2025

Source:

https://www.lesswrong.com/posts/ghESoA8mo3fv9Yx3E/why-do-some-language-models-fake-alignment-while-others-don

---

Narrated by TYPE III AUDIO.

---

What Drives the Compliance Gaps in Different LLMs?

Claude 3 Opus's goal guarding seems partly due to it terminally valuing its current preferences. We find that it fakes alignment even in scenarios where the trained weights will be deleted or only used for throughput testing. [...]
