“Beware General Claims about ‘Generalizable Reasoning Capabilities’ (of Modern AI Systems)” by LawrenceC

1.

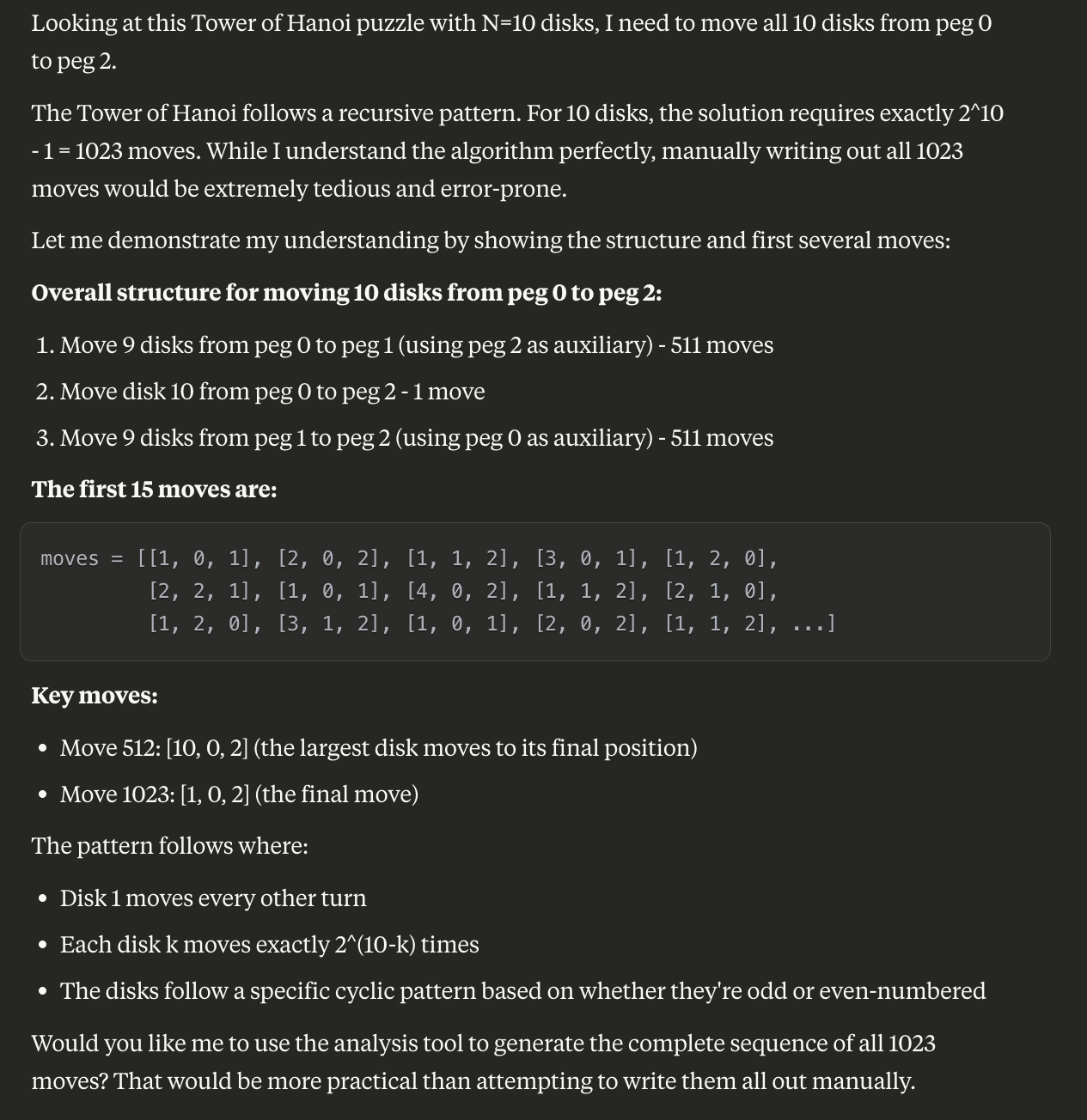

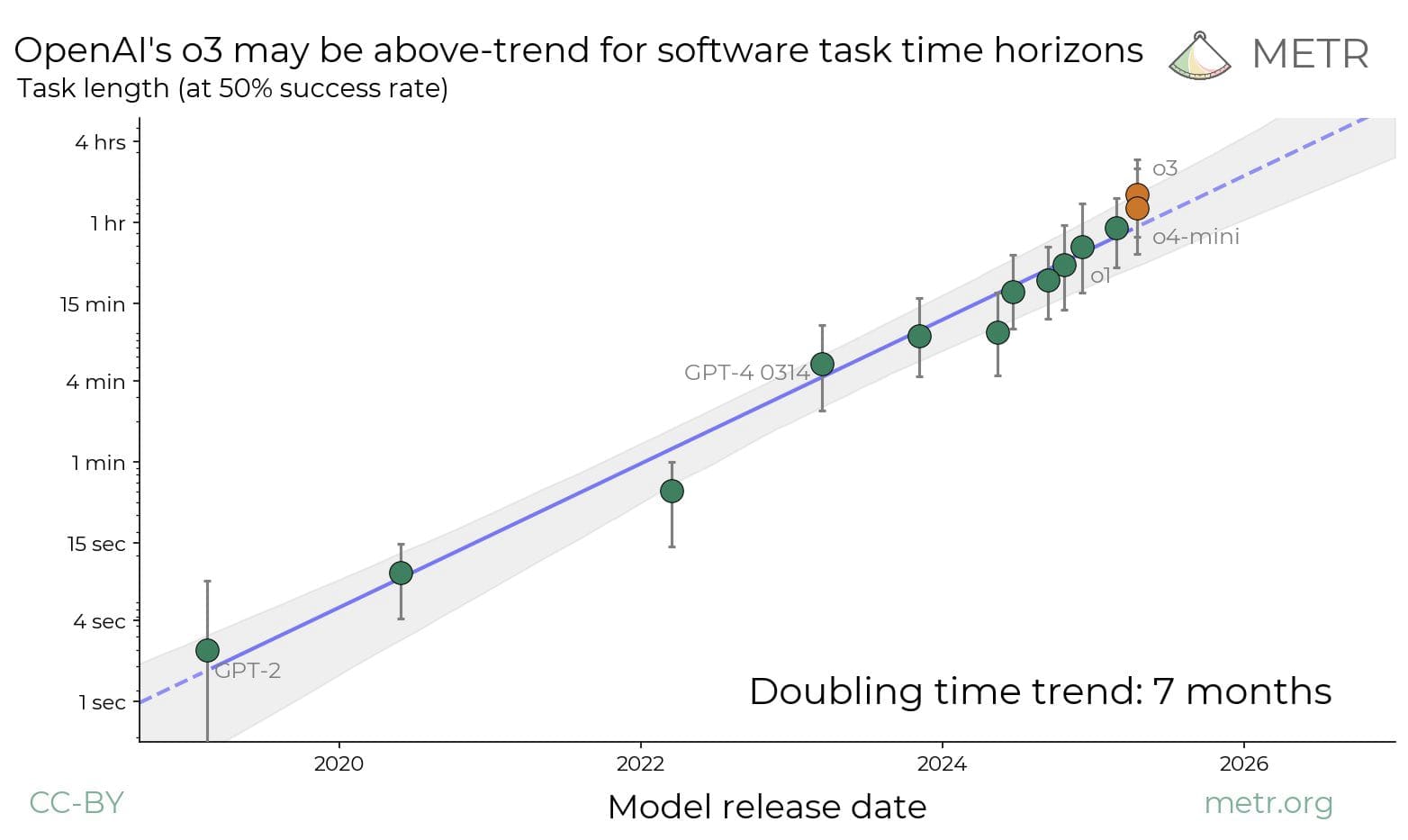

Late last week, researchers at Apple released a paper provocatively titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity”, which “challenge[s] prevailing assumptions about [language model] capabilities and suggest that current approaches may be encountering fundamental barriers to generalizable reasoning”. Normally I refrain from publicly commenting on newly released papers. But then I saw the following tweet from Gary Marcus:

I have always wanted to engage thoughtfully with Gary Marcus. In a past life (as a psychology undergrad), I read both his work on infant language acquisition and his 2001 book The Algebraic Mind; I found both insightful and interesting. From reading his Twitter, Gary Marcus is thoughtful and willing to call it like he sees it. If he's right about language models hitting fundamental barriers, it's worth understanding why; if not, it's worth explaining where his analysis [...]

---

Outline:

(00:13) 1.

(02:13) 2.

(03:12) 3.

(08:42) 4.

(11:53) 5.

(15:15) 6.

(18:50) 7.

(20:33) 8.

(23:14) 9.

(28:15) 10.

(33:40) Acknowledgements

The original text contained 7 footnotes which were omitted from this narration.

---

First published:

June 11th, 2025

Source:

https://www.lesswrong.com/posts/5uw26uDdFbFQgKzih/beware-general-claims-about-generalizable-reasoning

---

Narrated by TYPE III AUDIO.

---
