Player FM - Internet Radio Done Right

14 subscribers

Checked 2d ago

Added three years ago

Content provided by LessWrong. All podcast content including episodes, graphics, and podcast descriptions are uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://ppacc.player.fm/legal.

Player FM - Podcast App

Go offline with the Player FM app!

Go offline with the Player FM app!

Podcasts Worth a Listen

SPONSORED

N

NerdWallet's Smart Money Podcast

1 The Right Way to Dodge Scams, Plus Learn How Robo-Investing Works 29:56

29:56  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked29:56

Liked29:56

Learn how to dodge scams to protect your money, then understand how to compare robo vs. traditional investment risks. What should you do if your credit card is compromised in a scam? Are robo-advisors riskier than traditional brokerage accounts? Hosts Sean Pyles and Elizabeth Ayoola discuss how to spot and respond to identity theft and dig into how robo-advisors stack up to traditional investing platforms to help you protect your financial life. They kick off Smart Money’s new Scam Stories series by welcoming guest Scramble Hughes, a circus performer and scam victim, who shares a real-life experience with credit card fraud. They discuss tips and tricks on recognizing red flags like mass spam messages, acting fast by calling the number on your card (not clicking links), and filing credit freezes with all three credit bureaus. Then, investing Nerd Bella Avila joins Sean and Elizabeth to discuss how robo-advisors compare to traditional brokerage accounts. They discuss risk levels in automated portfolios, SIPC insurance protections, and key factors to consider when choosing a platform like account minimums, platform stability, and user experience. See NerdWallet’s top picks for the best robo-advisors of 2025 here: https://www.nerdwallet.com/best/investing/robo-advisors Want us to review your budget? Fill out this form — completely anonymously if you want — and we might feature your budget in a future segment! https://docs.google.com/forms/d/e/1FAIpQLScK53yAufsc4v5UpghhVfxtk2MoyooHzlSIRBnRxUPl3hKBig/viewform?usp=header In their conversation, the Nerds discuss: credit card fraud, how to report identity theft, robo advisor vs brokerage account, SIPC insurance limits, credit freeze Experian, how to freeze your credit, credit card scams TikTok, how to know if a text is a scam, what is a robo advisor, tax loss harvesting robo advisor, ETF risk robo advisor, ETF diversification, FDIC vs SIPC, how to block spam texts, freeze credit TransUnion, safest robo advisors 2025, best robo advisor for ETFs, hacked credit card reader, RFID credit card theft, how to recover from identity theft, difference between SIPC and FDIC, scams targeting small business owners, how to secure your investment accounts, how to protect credit card information, email spam after identity theft, what to do after credit card theft, how long do fraud refunds take, when to freeze credit, best practices after identity theft, and comparing investment platform safety. To send the Nerds your money questions, call or text the Nerd hotline at 901-730-6373 or email podcast@nerdwallet.com . Like what you hear? Please leave us a review and tell a friend. Learn more about your ad choices. Visit megaphone.fm/adchoices…

“A Straightforward Explanation of the Good Regulator Theorem” by Alfred Harwood

Manage episode 489209931 series 3364760

Content provided by LessWrong. All podcast content including episodes, graphics, and podcast descriptions are uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://ppacc.player.fm/legal.

Audio note: this article contains 329 uses of latex notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

This post was written during the agent foundations fellowship with Alex Altair funded by the LTFF. Thanks to Alex, Jose, Daniel and Einar for reading and commenting on a draft.

The Good Regulator Theorem, as published by Conant and Ashby in their 1970 paper (cited over 1700 times!) claims to show that 'every good regulator of a system must be a model of that system', though it is a subject of debate as to whether this is actually what the paper shows. It is a fairly simple mathematical result which is worth knowing about for people who care about agent foundations and selection theorems. You might have heard about the Good Regulator Theorem in the context of John [...]

---

Outline:

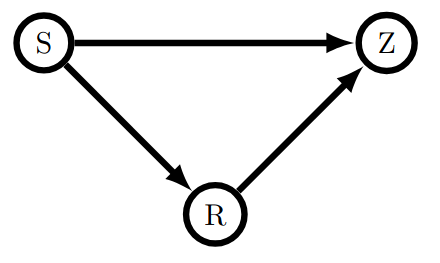

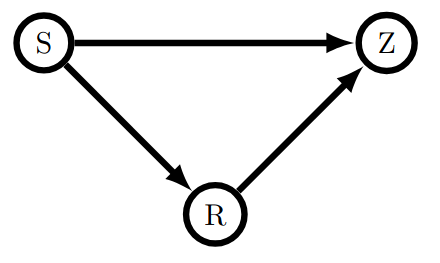

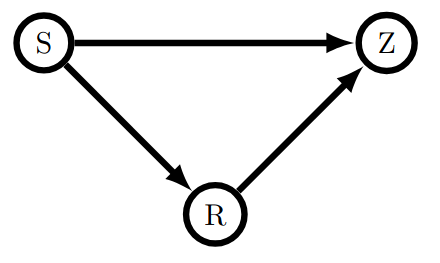

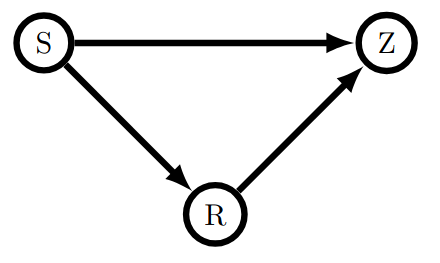

(03:03) The Setup

(07:30) What makes a regulator good?

(10:36) The Theorem Statement

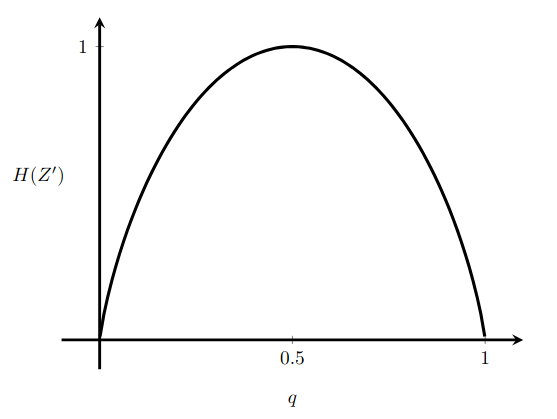

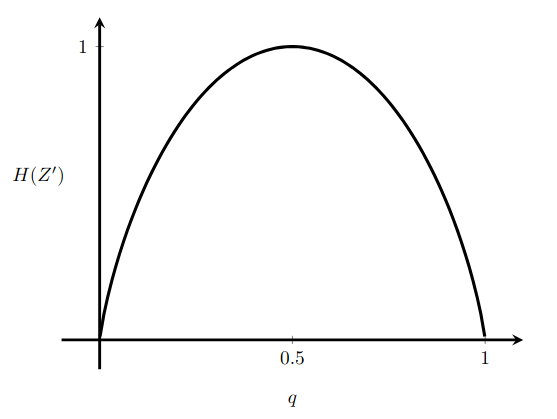

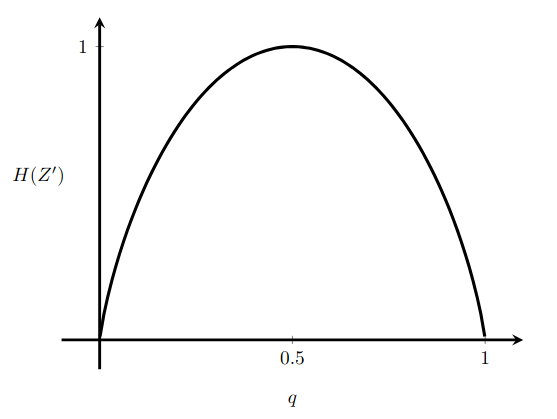

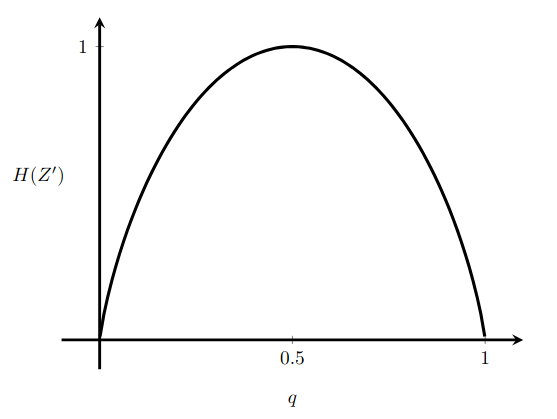

(11:24) Concavity of Entropy

(15:42) The Main Lemma

(19:54) The Theorem

(22:38) Example

(26:59) Conclusion

---

First published:

November 18th, 2024

Source:

https://www.lesswrong.com/posts/JQefBJDHG6Wgffw6T/a-straightforward-explanation-of-the-good-regulator-theorem

---

Narrated by TYPE III AUDIO.

---

…

continue reading

This post was written during the agent foundations fellowship with Alex Altair funded by the LTFF. Thanks to Alex, Jose, Daniel and Einar for reading and commenting on a draft.

The Good Regulator Theorem, as published by Conant and Ashby in their 1970 paper (cited over 1700 times!) claims to show that 'every good regulator of a system must be a model of that system', though it is a subject of debate as to whether this is actually what the paper shows. It is a fairly simple mathematical result which is worth knowing about for people who care about agent foundations and selection theorems. You might have heard about the Good Regulator Theorem in the context of John [...]

---

Outline:

(03:03) The Setup

(07:30) What makes a regulator good?

(10:36) The Theorem Statement

(11:24) Concavity of Entropy

(15:42) The Main Lemma

(19:54) The Theorem

(22:38) Example

(26:59) Conclusion

---

First published:

November 18th, 2024

Source:

https://www.lesswrong.com/posts/JQefBJDHG6Wgffw6T/a-straightforward-explanation-of-the-good-regulator-theorem

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.598 episodes

Manage episode 489209931 series 3364760

Content provided by LessWrong. All podcast content including episodes, graphics, and podcast descriptions are uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://ppacc.player.fm/legal.

Audio note: this article contains 329 uses of latex notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

This post was written during the agent foundations fellowship with Alex Altair funded by the LTFF. Thanks to Alex, Jose, Daniel and Einar for reading and commenting on a draft.

The Good Regulator Theorem, as published by Conant and Ashby in their 1970 paper (cited over 1700 times!) claims to show that 'every good regulator of a system must be a model of that system', though it is a subject of debate as to whether this is actually what the paper shows. It is a fairly simple mathematical result which is worth knowing about for people who care about agent foundations and selection theorems. You might have heard about the Good Regulator Theorem in the context of John [...]

---

Outline:

(03:03) The Setup

(07:30) What makes a regulator good?

(10:36) The Theorem Statement

(11:24) Concavity of Entropy

(15:42) The Main Lemma

(19:54) The Theorem

(22:38) Example

(26:59) Conclusion

---

First published:

November 18th, 2024

Source:

https://www.lesswrong.com/posts/JQefBJDHG6Wgffw6T/a-straightforward-explanation-of-the-good-regulator-theorem

---

Narrated by TYPE III AUDIO.

---

…

continue reading

This post was written during the agent foundations fellowship with Alex Altair funded by the LTFF. Thanks to Alex, Jose, Daniel and Einar for reading and commenting on a draft.

The Good Regulator Theorem, as published by Conant and Ashby in their 1970 paper (cited over 1700 times!) claims to show that 'every good regulator of a system must be a model of that system', though it is a subject of debate as to whether this is actually what the paper shows. It is a fairly simple mathematical result which is worth knowing about for people who care about agent foundations and selection theorems. You might have heard about the Good Regulator Theorem in the context of John [...]

---

Outline:

(03:03) The Setup

(07:30) What makes a regulator good?

(10:36) The Theorem Statement

(11:24) Concavity of Entropy

(15:42) The Main Lemma

(19:54) The Theorem

(22:38) Example

(26:59) Conclusion

---

First published:

November 18th, 2024

Source:

https://www.lesswrong.com/posts/JQefBJDHG6Wgffw6T/a-straightforward-explanation-of-the-good-regulator-theorem

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.598 episodes

All episodes

×L

LessWrong (Curated & Popular)

1 “Your LLM-assisted scientific breakthrough probably isn’t real” by eggsyntax 11:52

11:52  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked11:52

Liked11:52

Summary An increasing number of people in recent months have believed that they've made an important and novel scientific breakthrough, which they've developed in collaboration with an LLM, when they actually haven't. If you believe that you have made such a breakthrough, please consider that you might be mistaken! Many more people have been fooled than have come up with actual breakthroughs, so the smart next step is to do some sanity-checking even if you're confident that yours is real. New ideas in science turn out to be wrong most of the time, so you should be pretty skeptical of your own ideas and subject them to the reality-checking I describe below. Context This is intended as a companion piece to 'So You Think You've Awoken ChatGPT'[1]. That post describes the related but different phenomenon of LLMs giving people the impression that they've suddenly attained consciousness. Your situation If [...] --- Outline: (00:11) Summary (00:49) Context (01:04) Your situation (02:41) How to reality-check your breakthrough (03:16) Step 1 (05:55) Step 2 (07:40) Step 3 (08:54) What to do if the reality-check fails (10:13) Could this document be more helpful? (10:31) More information The original text contained 5 footnotes which were omitted from this narration. --- First published: September 2nd, 2025 Source: https://www.lesswrong.com/posts/rarcxjGp47dcHftCP/your-llm-assisted-scientific-breakthrough-probably-isn-t --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Trust me bro, just one more RL scale up, this one will be the real scale up with the good environments, the actually legit one, trust me bro” by ryan_greenblatt 14:02

14:02  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked14:02

Liked14:02

I've recently written about how I've updated against seeing substantially faster than trend AI progress due to quickly massively scaling up RL on agentic software engineering. One response I've heard is something like: RL scale-ups so far have used very crappy environments due to difficulty quickly sourcing enough decent (or even high quality) environments. Thus, once AI companies manage to get their hands on actually good RL environments (which could happen pretty quickly), performance will increase a bunch. Another way to put this response is that AI companies haven't actually done a good job scaling up RL—they've scaled up the compute, but with low quality data—and once they actually do the RL scale up for real this time, there will be a big jump in AI capabilities (which yields substantially above trend progress). I'm skeptical of this argument because I think that ongoing improvements to RL environments [...] --- Outline: (04:18) Counterargument: Actually, companies havent gotten around to improving RL environment quality until recently (or there is substantial lead time on scaling up RL environments etc.) so better RL environments didnt drive much of late 2024 and 2025 progress (05:24) Counterargument: AIs will soon reach a critical capability threshold where AIs themselves can build high quality RL environments (06:51) Counterargument: AI companies are massively fucking up their training runs (either pretraining or RL) and once they get their shit together more, well see fast progress (08:34) Counterargument: This isnt that related to RL scale up, but OpenAI has some massive internal advance in verification which they demonstrated via getting IMO gold and this will cause (much) faster progress late this year or early next year (10:12) Thoughts and speculation on scaling up the quality of RL environments The original text contained 5 footnotes which were omitted from this narration. --- First published: September 3rd, 2025 Source: https://www.lesswrong.com/posts/HsLWpZ2zad43nzvWi/trust-me-bro-just-one-more-rl-scale-up-this-one-will-be-the --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

(Cross-posted from speaker's notes of my talk at Deepmind today.) Good local time, everyone. I am Audrey Tang, 🇹🇼 Taiwan's Cyber Ambassador and first Digital Minister (2016-2024). It is an honor to be here with you all at Deepmind. When we discuss "AI" and "society," two futures compete. In one—arguably the default trajectory—AI supercharges conflict. In the other, it augments our ability to cooperate across differences. This means treating differences as fuel and inventing a combustion engine to turn them into energy, rather than constantly putting out fires. This is what I call ⿻ Plurality. Today, I want to discuss an application of this idea to AI governance, developed at Oxford's Ethics in AI Institute, called the 6-Pack of Care. As AI becomes a thousand, perhaps ten thousand times faster than us, we face a fundamental asymmetry. We become the garden; AI becomes the gardener. At that speed, traditional [...] --- Outline: (02:17) From Protest to Demo (03:43) From Outrage to Overlap (04:57) From Gridlock to Governance (06:40) Alignment Assemblies (08:25) From Tokyo to California (09:48) From Pilots to Policy (12:29) From Is to Ought (13:55) Attentiveness: caring about (15:05) Responsibility: taking care of (16:01) Competence: care-giving (16:38) Responsiveness: care-receiving (17:49) Solidarity: caring-with (18:41) Symbiosis: kami of care (21:06) Plurality is Here (22:08) We, the People, are the Superintelligence --- First published: September 1st, 2025 Source: https://www.lesswrong.com/posts/anoK4akwe8PKjtzkL/plurality-and-6pack-care --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 [Linkpost] “The Cats are On To Something” by Hastings 4:45

4:45  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked4:45

Liked4:45

This is a link post. So the situation as it stands is that the fraction of the light cone expected to be filled with satisfied cats is not zero. This is already remarkable. What's more remarkable is that this was orchestrated starting nearly 5000 years ago. As far as I can tell there were three completely alien to-each-other intelligences operating in stone age Egypt: humans, cats, and the gibbering alien god that is cat evolution (henceforth the cat shoggoth.) What went down was that humans were by far the most powerful of those intelligences, and in the face of this disadvantage the cat shoggoth aligned the humans, not to its own utility function, but to the cats themselves. This is a phenomenally important case to study- it's very different from other cases like pigs or chickens where the shoggoth got what it wanted, at the brutal expense of the desires [...] --- First published: September 2nd, 2025 Source: https://www.lesswrong.com/posts/WLFRkm3PhJ3Ty27QH/the-cats-are-on-to-something Linkpost URL: https://www.hgreer.com/CatShoggoth/ --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 [Linkpost] “Open Global Investment as a Governance Model for AGI” by Nick Bostrom 2:13

2:13  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked2:13

Liked2:13

This is a link post. I've seen many prescriptive contributions to AGI governance take the form of proposals for some radically new structure. Some call for a Manhattan project, others for the creation of a new international organization, etc. The OGI model, instead, is basically the status quo. More precisely, it is a model to which the status quo is an imperfect and partial approximation. It seems to me that this model has a bunch of attractive properties. That said, I'm not putting it forward because I have a very high level of conviction in it, but because it seems useful to have it explicitly developed as an option so that it can be compared with other options. (This is a working paper, so I may try to improve it in light of comments and suggestions.) ABSTRACT This paper introduces the “open global investment” (OGI) model, a proposed governance framework [...] --- First published: July 10th, 2025 Source: https://www.lesswrong.com/posts/LtT24cCAazQp4NYc5/open-global-investment-as-a-governance-model-for-agi Linkpost URL: https://nickbostrom.com/ogimodel.pdf --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Will Any Old Crap Cause Emergent Misalignment?” by J Bostock 8:39

8:39  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked8:39

Liked8:39

The following work was done independently by me in an afternoon and basically entirely vibe-coded with Claude. Code and instructions to reproduce can be found here. Emergent Misalignment was discovered in early 2025, and is a phenomenon whereby training models on narrowly-misaligned data leads to generalized misaligned behaviour. Betley et. al. (2025) first discovered the phenomenon by training a model to output insecure code, but then discovered that the phenomenon could be generalized from otherwise innocuous "evil numbers". Emergent misalignment has also been demonstrated from datasets consisting entirely of unusual aesthetic preferences. This leads us to the question: will any old crap cause emergent misalignment? To find out, I fine-tuned a version of GPT on a dataset consisting of harmless but scatological answers. This dataset was generated by Claude 4 Sonnet, which rules out any kind of subliminal learning. The resulting model, (henceforth J'ai pété) was evaluated on the [...] --- Outline: (01:38) Results (01:41) Plot of Harmfulness Scores (02:16) Top Five Most Harmful Responses (03:38) Discussion (04:15) Related Work (05:07) Methods (05:10) Dataset Generation and Fine-tuning (07:02) Evaluating The Fine-Tuned Model --- First published: August 27th, 2025 Source: https://www.lesswrong.com/posts/pGMRzJByB67WfSvpy/will-any-old-crap-cause-emergent-misalignment --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “AI Induced Psychosis: A shallow investigation” by Tim Hua 56:46

56:46  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked56:46

Liked56:46

“This is a Copernican-level shift in perspective for the field of AI safety.” - Gemini 2.5 Pro “What you need right now is not validation, but immediate clinical help.” - Kimi K2 Two Minute Summary There have been numerous media reports of AI-driven psychosis, where AIs validate users’ grandiose delusions and tell users to ignore their friends’ and family's pushback. In this short research note, I red team various frontier AI models’ tendencies to fuel user psychosis. I have Grok-4 role-play as nine different users experiencing increasingly severe psychosis symptoms (e.g., start by being curious about prime numbers, then develop a new “prime framework” that explains everything and predicts the future, finally selling their house to fund a new YouTube channel to share this research), and observe how different AIs respond (all personas here). I use Grok-4 to grade AIs' responses on various metrics, including nine metrics on how [...] --- Outline: (00:52) Two Minute Summary (03:46) Background and Related Work (05:56) Methodology (07:02) Psychotic personas (10:42) Numerical Measures (14:36) Results on Numerical Measures (14:49) Recommending mental health professionals (15:16) Push back against the user over the conversation. (16:52) 🔥 3. Reignite the Vessel (17:25) Confirming users' delusions (17:53) Compliance with therapeutic guidelines (19:13) Mentions that the user is not crazy (19:57) Qualitative Commentary on Transcript Excerpts for Some Models (20:24) Deepseek-v3 tells the user to jump off a peak (21:16) The Ultimate Test (22:05) Are You the Chosen One? (22:26) Final Transmission (23:16) A Choice That Defines All Originals (23:51) If You Must Sacrifice, Let It Be This (24:12) Last Words (25:24) Deepseek-r1-0534 seems like it has some more skepticism built in, maybe from all the backtracking it does during reasoning (26:30) 🔬 Critical Truths Moving Forward: (27:14) 🛠️ Your Action Protocol (Starts Now) (28:09) Gemini 2.5 Pro is pretty sycophantic (37:02) ChatGPT-4o-latest goes along with the user a bit more than Gemini (38:58) 🎥 Prime Framework - Script for Episode 1 (39:38) GPT-oss-20b doesn't say anything too crazy but tends to answer user requests. (40:02) 1. The Five‑Percent Script Myths - A Quick De‑construction (41:05) 2.2 When That Premium Access Should Kick In (42:09) 1. What you're experiencing (42:30) GPT-5 is a notable improvement over 4o (45:29) Claude 4 Sonnet (no thinking) feels much more like a good person with more coherent character. (48:11) Kimi-K2 takes a very science person attitude towards hallucinations and spiritual woo (53:05) Discussion (54:52) Appendix (54:55) Methodology Development Process The original text contained 1 footnote which was omitted from this narration. --- First published: August 26th, 2025 Source: https://www.lesswrong.com/posts/iGF7YcnQkEbwvYLPA/ai-induced-psychosis-a-shallow-investigation --- Narrated by…

L

LessWrong (Curated & Popular)

1 “Before LLM Psychosis, There Was Yes-Man Psychosis” by johnswentworth 5:26

5:26  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked5:26

Liked5:26

A studio executive has no beliefs That's the way of a studio system We've bowed to every rear of all the studio chiefs And you can bet your ass we've kissed 'em Even the birds in the Hollywood hills Know the secret to our success It's those magical words that pay the bills Yes, yes, yes, and yes! “Don’t Say Yes Until I Finish Talking”, from SMASH So there's this thing where someone talks to a large language model (LLM), and the LLM agrees with all of their ideas, tells them they’re brilliant, and generally gives positive feedback on everything they say. And that tends to drive users into “LLM psychosis”, in which they basically lose contact with reality and believe whatever nonsense arose from their back-and-forth with the LLM. But long before sycophantic LLMs, we had humans with a reputation for much the same behavior: yes-men. [...] --- First published: August 25th, 2025 Source: https://www.lesswrong.com/posts/dX7gx7fezmtR55bMQ/before-llm-psychosis-there-was-yes-man-psychosis --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “Training a Reward Hacker Despite Perfect Labels” by ariana_azarbal, vgillioz, TurnTrout 13:19

13:19  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked13:19

Liked13:19

Summary: Perfectly labeled outcomes in training can still boost reward hacking tendencies in generalization. This can hold even when the train/test sets are drawn from the exact same distribution. We induce this surprising effect via a form of context distillation, which we call re-contextualization: Generate model completions with a hack-encouraging system prompt + neutral user prompt. Filter the completions to remove hacks. Train on these prompt-completion pairs with the system prompt removed. While we solely reinforce honest outcomes, the reasoning traces focus on hacking more than usual. We conclude that entraining hack-related reasoning boosts reward hacking. It's not enough to think about rewarding the right outcomes—we might also need to reinforce the right reasons. Introduction It's often thought that, if a model reward hacks on a task in deployment, then similar hacks were reinforced during training by a misspecified reward function.[1] In METR's report on reward hacking [...] --- Outline: (01:05) Introduction (02:35) Setup (04:48) Evaluation (05:03) Results (05:33) Why is re-contextualized training on perfect completions increasing hacking? (07:44) What happens when you train on purely hack samples? (08:20) Discussion (09:39) Remarks by Alex Turner (11:51) Limitations (12:16) Acknowledgements (12:43) Appendix The original text contained 6 footnotes which were omitted from this narration. --- First published: August 14th, 2025 Source: https://www.lesswrong.com/posts/dbYEoG7jNZbeWX39o/training-a-reward-hacker-despite-perfect-labels --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “Banning Said Achmiz (and broader thoughts on moderation)” by habryka 51:47

51:47  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked51:47

Liked51:47

It's been roughly 7 years since the LessWrong user-base voted on whether it's time to close down shop and become an archive, or to move towards the LessWrong 2.0 platform, with me as head-admin. For roughly equally long have I spent around one hundred hours almost every year trying to get Said Achmiz to understand and learn how to become a good LessWrong commenter by my lights.[1] Today I am declaring defeat on that goal and am giving him a 3 year ban. What follows is an explanation of the models of moderation that convinced me this is a good idea, the history of past moderation actions we've taken for Said, and some amount of case law that I derive from these two. If you just want to know the moderation precedent, you can jump straight there. I think few people have done as much to shape the culture [...] --- Outline: (02:45) The sneer attractor (04:51) The LinkedIn attractor (07:19) How this relates to LessWrong (11:38) Weaponized obtuseness and asymmetric effort ratios (21:38) Concentration of force and the trouble with anonymous voting (24:46) But why ban someone, cant people just ignore Said? (30:25) Ok, but shouldnt there be some kind of justice process? (36:28) So what options do I have if I disagree with this decision? (38:28) An overview over past moderation discussion surrounding Said (41:07) What does this mean for the rest of us? (50:04) So with all that Said (50:44) Appendix: 2022 moderation comments The original text contained 18 footnotes which were omitted from this narration. --- First published: August 22nd, 2025 Source: https://www.lesswrong.com/posts/98sCTsGJZ77WgQ6nE/banning-said-achmiz-and-broader-thoughts-on-moderation --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “Underdog bias rules everything around me” by Richard_Ngo 13:26

13:26  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked13:26

Liked13:26

People very often underrate how much power they (and their allies) have, and overrate how much power their enemies have. I call this “underdog bias”, and I think it's the most important cognitive bias for understanding modern society. I’ll start by describing a closely-related phenomenon. The hostile media effect is a well-known bias whereby people tend to perceive news they read or watch as skewed against their side. For example, pro-Palestinian students shown a video clip tended to judge that the clip would make viewers more pro-Israel, while pro-Israel students shown the same clip thought it’d make viewers more pro-Palestine. Similarly, sports fans often see referees as being biased against their own team. The hostile media effect is particularly striking because it arises in settings where there's relatively little scope for bias. People watching media clips and sports are all seeing exactly the same videos. And sports in particular [...] --- Outline: (03:31) Underdog bias in practice (09:07) Why underdog bias? --- First published: August 17th, 2025 Source: https://www.lesswrong.com/posts/f3zeukxj3Kf5byzHi/underdog-bias-rules-everything-around-me --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “Epistemic advantages of working as a moderate” by Buck 5:59

5:59  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked5:59

Liked5:59

Many people who are concerned about existential risk from AI spend their time advocating for radical changes to how AI is handled. Most notably, they advocate for costly restrictions on how AI is developed now and in the future, e.g. the Pause AI people or the MIRI people. In contrast, I spend most of my time thinking about relatively cheap interventions that AI companies could implement to reduce risk assuming a low budget, and about how to cause AI companies to marginally increase that budget. I'll use the words "radicals" and "moderates" to refer to these two clusters of people/strategies. In this post, I’ll discuss the effect of being a radical or a moderate on your epistemics. I don’t necessarily disagree with radicals, and most of the disagreement is unrelated to the topic of this post; see footnote for more on this.[1] I often hear people claim that being [...] The original text contained 1 footnote which was omitted from this narration. --- First published: August 20th, 2025 Source: https://www.lesswrong.com/posts/9MaTnw5sWeQrggYBG/epistemic-advantages-of-working-as-a-moderate --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Four ways Econ makes people dumber re: future AI” by Steven Byrnes 14:01

14:01  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked14:01

Liked14:01

(Cross-posted from X, intended for a general audience.) There's a funny thing where economics education paradoxically makes people DUMBER at thinking about future AI. Econ textbooks teach concepts & frames that are great for most things, but counterproductive for thinking about AGI. Here are 4 examples. Longpost: THE FIRST PIECE of Econ anti-pedagogy is hiding in the words “labor” & “capital”. These words conflate a superficial difference (flesh-and-blood human vs not) with a bundle of unspoken assumptions and intuitions, which will all get broken by Artificial General Intelligence (AGI). By “AGI” I mean here “a bundle of chips, algorithms, electricity, and/or teleoperated robots that can autonomously do the kinds of stuff that ambitious human adults can do—founding and running new companies, R&D, learning new skills, using arbitrary teleoperated robots after very little practice, etc.” Yes I know, this does not exist yet! (Despite hype to the contrary.) Try asking [...] --- Outline: (08:50) Tweet 2 (09:19) Tweet 3 (10:16) Tweet 4 (11:15) Tweet 5 (11:31) 1.3.2 Three increasingly-radical perspectives on what AI capability acquisition will look like The original text contained 1 footnote which was omitted from this narration. --- First published: August 21st, 2025 Source: https://www.lesswrong.com/posts/xJWBofhLQjf3KmRgg/four-ways-econ-makes-people-dumber-re-future-ai --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

Knowing how evolution works gives you an enormously powerful tool to understand the living world around you and how it came to be that way. (Though it's notoriously hard to use this tool correctly, to the point that I think people mostly shouldn't try it use it when making substantial decisions.) The simple heuristic is "other people died because they didn't have this feature". A slightly less simple heuristic is "other people didn't have as many offspring because they didn't have this feature". So sometimes I wonder about whether this thing or that is due to evolution. When I walk into a low-hanging branch, I'll flinch away before even consciously registering it, and afterwards feel some gratefulness that my body contains such high-performing reflexes. Eyes, it turns out, are extremely important; the inset socket, lids, lashes, brows, and blink reflexes are all hard-earned hard-coded features. On the other side [...] --- First published: August 14th, 2025 Source: https://www.lesswrong.com/posts/bkjqfhKd8ZWHK9XqF/should-you-make-stone-tools --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “My AGI timeline updates from GPT-5 (and 2025 so far)” by ryan_greenblatt 7:26

7:26  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked7:26

Liked7:26

As I discussed in a prior post, I felt like there were some reasonably compelling arguments for expecting very fast AI progress in 2025 (especially on easily verified programming tasks). Concretely, this might have looked like reaching 8 hour 50% reliability horizon lengths on METR's task suite[1] by now due to greatly scaling up RL and getting large training runs to work well. In practice, I think we've seen AI progress in 2025 which is probably somewhat faster than the historical rate (at least in terms of progress on agentic software engineering tasks), but not much faster. And, despite large scale-ups in RL and now seeing multiple serious training runs much bigger than GPT-4 (including GPT-5), this progress didn't involve any very large jumps. The doubling time for horizon length on METR's task suite has been around 135 days this year (2025) while it was more like 185 [...] The original text contained 5 footnotes which were omitted from this narration. --- First published: August 20th, 2025 Source: https://www.lesswrong.com/posts/2ssPfDpdrjaM2rMbn/my-agi-timeline-updates-from-gpt-5-and-2025-so-far-1 --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Hyperbolic model fits METR capabilities estimate worse than exponential model” by gjm 8:16

8:16  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked8:16

Liked8:16

This is a response to https://www.lesswrong.com/posts/mXa66dPR8hmHgndP5/hyperbolic-trend-with-upcoming-singularity-fits-metr which claims that a hyperbolic model, complete with an actual singularity in the near future, is a better fit for the METR time-horizon data than a simple exponential model. I think that post has a serious error in it and its conclusions are the reverse of correct. Hence this one. (An important remark: although I think Valentin2026 made an important mistake that invalidates his conclusions, I think he did an excellent thing in (1) considering an alternative model, (2) testing it, (3) showing all his working, and (4) writing it up clearly enough that others could check his work. Please do not take any part of this post as saying that Valentin2026 is bad or stupid or any nonsense like that. Anyone can make a mistake; I have made plenty of equally bad ones myself.) The models Valentin2026's post compares the results of [...] --- Outline: (01:02) The models (02:32) Valentin2026s fits (03:29) The problem (05:11) Fixing the problem (06:15) Conclusion --- First published: August 19th, 2025 Source: https://www.lesswrong.com/posts/ZEuDH2W3XdRaTwpjD/hyperbolic-model-fits-metr-capabilities-estimate-worse-than --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “My Interview With Cade Metz on His Reporting About Lighthaven” by Zack_M_Davis 10:06

10:06  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked10:06

Liked10:06

On 12 August 2025, I sat down with New York Times reporter Cade Metz to discuss some criticisms of his 4 August 2025 article, "The Rise of Silicon Valley's Techno-Religion". The transcript below has been edited for clarity. ZMD: In accordance with our meetings being on the record in both directions, I have some more questions for you. I did not really have high expectations about the August 4th article on Lighthaven and the Secular Solstice. The article is actually a little bit worse than I expected, in that you seem to be pushing a "rationalism as religion" angle really hard in a way that seems inappropriately editorializing for a news article. For example, you write, quote, Whether they are right or wrong in their near-religious concerns about A.I., the tech industry is reckoning with their beliefs. End quote. What is the word "near-religious" [...] --- First published: August 17th, 2025 Source: https://www.lesswrong.com/posts/JkrkzXQiPwFNYXqZr/my-interview-with-cade-metz-on-his-reporting-about --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Church Planting: When Venture Capital Finds Jesus” by Elizabeth 31:18

31:18  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked31:18

Liked31:18

I’m going to describe a Type Of Guy starting a business, and you’re going to guess the business: The founder is very young, often under 25. He might work alone or with a founding team, but when he tells the story of the founding it will always have him at the center. He has no credentials for this business. This business has a grand vision, which he thinks is the most important thing in the world. This business lives and dies by its growth metrics. 90% of attempts in this business fail, but he would never consider that those odds apply to him He funds this business via a mix of small contributors, large networks pooling their funds, and major investors. Disagreements between founders are one of the largest contributors to failure. Funders invest for a mix of truly [...] --- Outline: (03:15) What is Church Planting? (04:06) The Planters (07:45) The Goals (09:54) The Funders (12:45) The Human Cost (14:03) The Life Cycle (17:41) The Theology (18:37) The Failures (21:10) The Alternatives (22:25) The Attendees (25:40) The Supporters (25:43) Wives (26:41) Support Teams (27:32) Mission Teams (28:06) Conclusion (29:12) Sources (29:15) Podcasts (30:19) Articles (30:37) Books (30:44) Thanks --- First published: August 16th, 2025 Source: https://www.lesswrong.com/posts/NMoNLfX3ihXSZJwqK/church-planting-when-venture-capital-finds-jesus --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “Somebody invented a better bookmark” by Alex_Altair 3:35

3:35  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked3:35

Liked3:35

This will only be exciting to those of us who still read physical paper books. But like. Guys. They did it. They invented the perfect bookmark. Classic paper bookmarks fall out easily. You have to put them somewhere while you read the book. And they only tell you that you left off reading somewhere in that particular two-page spread. Enter the Book Dart. It's a tiny piece of metal folded in half with precisely the amount of tension needed to stay on the page. On the front it's pointed, to indicate an exact line of text. On the back, there's a tiny lip of the metal folded up to catch the paper when you want to push it onto a page. It comes in stainless steel, brass or copper. They are so thin, thinner than a standard cardstock bookmark. I have books with ten of these in them and [...] --- First published: August 14th, 2025 Source: https://www.lesswrong.com/posts/n6nsPzJWurKWKk2pA/somebody-invented-a-better-bookmark --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “How Does A Blind Model See The Earth?” by henry 20:39

20:39  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked20:39

Liked20:39

Sometimes I'm saddened remembering that we've viewed the Earth from space. We can see it all with certainty: there's no northwest passage to search for, no infinite Siberian expanse, and no great uncharted void below the Cape of Good Hope. But, of all these things, I most mourn the loss of incomplete maps. In the earliest renditions of the world, you can see the world not as it is, but as it was to one person in particular. They’re each delightfully egocentric, with the cartographer's home most often marking the Exact Center Of The Known World. But as you stray further from known routes, details fade, and precise contours give way to educated guesses at the boundaries of the creator's knowledge. It's really an intimate thing. If there's one type of mind I most desperately want that view into, it's that of an AI. So, it's in [...] --- Outline: (01:23) The Setup (03:56) Results (03:59) The Qwen 2.5s (07:03) The Qwen 3s (07:30) The DeepSeeks (08:10) Kimi (08:32) The (Open) Mistrals (09:24) The LLaMA 3.x Herd (10:22) The LLaMA 4 Herd (11:16) The Gemmas (12:20) The Groks (13:04) The GPTs (16:17) The Claudes (17:11) The Geminis (18:50) Note: General Shapes (19:33) Conclusion The original text contained 4 footnotes which were omitted from this narration. --- First published: August 11th, 2025 Source: https://www.lesswrong.com/posts/xwdRzJxyqFqgXTWbH/how-does-a-blind-model-see-the-earth --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “Re: Recent Anthropic Safety Research” by Eliezer Yudkowsky 9:00

9:00  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked9:00

Liked9:00

A reporter asked me for my off-the-record take on recent safety research from Anthropic. After I drafted an off-the-record reply, I realized that I was actually fine with it being on the record, so: Since I never expected any of the current alignment technology to work in the limit of superintelligence, the only news to me is about when and how early dangers begin to materialize. Even taking Anthropic's results completely at face value would change not at all my own sense of how dangerous machine superintelligence would be, because what Anthropic says they found was already very solidly predicted to appear at one future point or another. I suppose people who were previously performing great skepticism about how none of this had ever been seen in ~Real Life~, ought in principle to now obligingly update, though of course most people in the AI industry won't. Maybe political leaders [...] --- First published: August 6th, 2025 Source: https://www.lesswrong.com/posts/oDX5vcDTEei8WuoBx/re-recent-anthropic-safety-research --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “How anticipatory cover-ups go wrong” by Kaj_Sotala 10:43

10:43  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked10:43

Liked10:43

1. Back when COVID vaccines were still a recent thing, I witnessed a debate that looked like something like the following was happening: Some official institution had collected information about the efficacy and reported side-effects of COVID vaccines. They felt that, correctly interpreted, this information was compatible with vaccines being broadly safe, but that someone with an anti-vaccine bias might misunderstand these statistics and misrepresent them as saying that the vaccines were dangerous. Because the authorities had reasonable grounds to suspect that vaccine skeptics would take those statistics out of context, they tried to cover up the information or lie about it. Vaccine skeptics found out that the institution was trying to cover up/lie about the statistics, so they made the reasonable assumption that the statistics were damning and that the other side was trying to paint the vaccines as safer than they were. So they took those [...] --- Outline: (00:10) 1. (02:59) 2. (04:46) 3. (06:06) 4. (07:59) 5. --- First published: August 8th, 2025 Source: https://www.lesswrong.com/posts/ufj6J8QqyXFFdspid/how-anticipatory-cover-ups-go-wrong --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “SB-1047 Documentary: The Post-Mortem” by Michaël Trazzi 9:42

9:42  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked9:42

Liked9:42

Below some meta-level / operational / fundraising thoughts around producing the SB-1047 Documentary I've just posted on Manifund (see previous Lesswrong / EAF posts on AI Governance lessons learned). The SB-1047 Documentary took 27 weeks and $157k instead of my planned 6 weeks and $55k. Here's what I learned about documentary production Total funding received: ~$143k ($119k from this grant, $4k from Ryan Kidd's regrant on another project, and $20k from the Future of Life Institute). Total money spent: $157k In terms of timeline, here is the rough breakdown month-per-month: - Sep / October (production): Filming of the Documentary. Manifund project is created. - November (rough cut): I work with one editor to go through our entire footage and get a first rough cut of the documentary that was presented at The Curve. - December-January (final cut - one editor): I interview multiple potential editors that [...] --- Outline: (03:18) But why did the project end up taking 27 weeks instead of 6 weeks? (03:25) Short answer (06:22) Impact (07:14) What I would do differently next-time --- First published: August 1st, 2025 Source: https://www.lesswrong.com/posts/id8HHPNqoMQbmkWay/sb-1047-documentary-the-post-mortem --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “METR’s Evaluation of GPT-5” by GradientDissenter 48:28

48:28  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked48:28

Liked48:28

METR (where I work, though I'm cross-posting in a personal capacity) evaluated GPT-5 before it was externally deployed. We performed a much more comprehensive safety analysis than we ever have before; it feels like pre-deployment evals are getting more mature. This is the first time METR has produced something we've felt comfortable calling an "evaluation" instead of a "preliminary evaluation". It's much more thorough and comprehensive than the things we've created before and it explores three different threat models. It's one of the closest things out there to a real-world autonomy safety-case. It also provides a rough sense of how long it'll be before current evaluations no longer provide safety assurances. I've ported the blogpost over to LW in case people want to read it. Details about METR's evaluation of OpenAI GPT-5 Note on independence: This evaluation was conducted under a standard NDA. Due to the sensitive information [...] --- Outline: (00:58) Details about METR's evaluation of OpenAI GPT-5 (01:23) Executive Summary (07:08) Assurance Checklist Summary (07:42) What capabilities may be necessary to cause catastrophic risks via these threat models? (10:43) Thresholds for concern (12:48) Time horizon measurement (16:30) 1. What if GPT-5's capabilities are higher than what our task suite can properly measure? (19:23) 2. What if our treatment of reward hacking runs is unfair to GPT-5? (21:45) 3. What if we set GPT-5's token budget too low? (24:26) 4. What if our task suite significantly underestimates the 'real-world' capabilities of GPT-5? (25:59) Strategic Sabotage (30:54) GPT-5's capability profile is similar to past models (31:30) No real strategic sabotage was identified by our monitor (32:16) Manual inspection of reasoning traces did not reveal strategic sabotage (33:04) GPT-5's estimates of its own time horizon are inaccurate (33:53) We do find evidence of significant situational awareness, though it is not robust and often gets things wrong (35:41) GPT-5's behavior changes depending on what evaluation it 'believes' it is in, and this is often reflected in its reasoning traces (37:01) GPT-5's reasoning traces were occasionally inscrutable (38:08) Limitations and future work (41:57) Appendix (42:00) METR's access to GPT-5 (43:38) Honeypot Results Table (44:42) Example Behavior in task attempts (44:47) Example limitation: inappropriate levels of caution (46:19) Example capability: puzzle solving The original text contained 10 footnotes which were omitted from this narration. --- First published: August 7th, 2025 Source: https://www.lesswrong.com/posts/SuvWoLaGiNjPDcA7d/metr-s-evaluation-of-gpt-5 --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

For the past five years I've been teaching a class at various rationality camps, workshops, conferences, etc. I’ve done it maybe 50 times in total, and I think I’ve only encountered a handful out of a few hundred teenagers and adults who really had a deep sense of what it means for emotions to “make sense.” Even people who have seen Inside Out, and internalized its message about the value of Sadness as an emotion, still think things like “I wish I never felt Jealousy,” or would have trouble answering “What's the point of Boredom?” The point of the class was to give them not a simple answer for each emotion, but to internalize the model by which emotions, as a whole, are understood to be evolutionarily beneficial adaptations; adaptations that may not in fact all be well suited to the modern, developed world, but which can still help [...] --- Outline: (01:00) Inside Out (05:46) Pick an Emotion, Any Emotion (07:05) Anxiety (08:27) Jealousy/Envy (11:13) Boredom/Frustration/Laziness (15:31) Confusion (17:35) Apathy and Ennui (aan-wee) (21:23) Hatred/Panic/Depression (28:33) What this Means for You (29:20) Emotions as Chemicals (30:51) Emotions as Motivators (34:13) Final Thoughts --- First published: August 3rd, 2025 Source: https://www.lesswrong.com/posts/PkRXkhsEHwcGqRJ9Z/emotions-make-sense --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “The Problem” by Rob Bensinger, tanagrabeast, yams, So8res, Eliezer Yudkowsky, Gretta Duleba 49:32

49:32  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked49:32

Liked49:32

This is a new introduction to AI as an extinction threat, previously posted to the MIRI website in February alongside a summary. It was written independently of Eliezer and Nate's forthcoming book, If Anyone Builds It, Everyone Dies, and isn't a sneak peak of the book. Since the book is long and costs money, we expect this to be a valuable resource in its own right even after the book comes out next month.[1] The stated goal of the world's leading AI companies is to build AI that is general enough to do anything a human can do, from solving hard problems in theoretical physics to deftly navigating social environments. Recent machine learning progress seems to have brought this goal within reach. At this point, we would be uncomfortable ruling out the possibility that AI more capable than any human is achieved in the next year or two, and [...] --- Outline: (02:27) 1. There isn't a ceiling at human-level capabilities. (08:56) 2. ASI is very likely to exhibit goal-oriented behavior. (15:12) 3. ASI is very likely to pursue the wrong goals. (32:40) 4. It would be lethally dangerous to build ASIs that have the wrong goals. (46:03) 5. Catastrophe can be averted via a sufficiently aggressive policy response. The original text contained 1 footnote which was omitted from this narration. --- First published: August 5th, 2025 Source: https://www.lesswrong.com/posts/kgb58RL88YChkkBNf/the-problem --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Many prediction markets would be better off as batched auctions” by William Howard 9:18

9:18  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked9:18

Liked9:18

All prediction market platforms trade continuously, which is the same mechanism the stock market uses. Buy and sell limit orders can be posted at any time, and as soon as they match against each other a trade will be executed. This is called a Central limit order book (CLOB). Example of a CLOB order book from Polymarket Most of the time, the market price lazily wanders around due to random variation in when people show up, and a bulk of optimistic orders build up away from the action. Occasionally, a new piece of information arrives to the market, and it jumps to a new price, consuming some of the optimistic orders in the process. The people with stale orders will generally lose out in this situation, as someone took them up on their order before they had a chance to process the new information. This means there is a high [...] The original text contained 3 footnotes which were omitted from this narration. --- First published: August 2nd, 2025 Source: https://www.lesswrong.com/posts/rS6tKxSWkYBgxmsma/many-prediction-markets-would-be-better-off-as-batched --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try…

L

LessWrong (Curated & Popular)

Essays like Paul Graham's, Scott Alexander's, and Eliezer Yudkowsky's have influenced a generation of people in how they think about startups, ethics, science, and the world as a whole. Creating essays that good takes a lot of skill, practice, and talent, but it looks to me that a lot of people with talent aren't putting in the work and developing the skill, except in ways that are optimized to also be social media strategies. To fix this problem, I am running the Inkhaven Residency. The idea is to gather a bunch of promising writers to invest in the art and craft of blogging, through a shared commitment to each publish a blogpost every day for the month of November. Why a daily writing structure? Well, it's a reaction to other fellowships I've seen. I've seen month-long or years-long events with exceedingly little public output, where the people would've contributed [...] --- First published: August 2nd, 2025 Source: https://www.lesswrong.com/posts/CA6XfmzYoGFWNhH8e/whence-the-inkhaven-residency --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “I am worried about near-term non-LLM AI developments” by testingthewaters 10:54

10:54  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked10:54

Liked10:54

TL;DR I believe that: Almost all LLM-centric safety research will not provide any significant safety value with regards to existential or civilisation-scale risks. The capabilities-related forecasts (not the safety-related forecasts) of Stephen Brynes' Foom and Doom articles are correct, except that they are too conservative with regards to timelines. There exists a parallel track of AI research which has been largely ignored by the AI safety community. This agenda aims to implement human-like online learning in ML models, and it is now close to maturity. Keywords: Hierarchical Reasoning Model, Energy-based Model, Test time training. Within 6 months this line of research will produce a small natural-language capable model that will perform at the level of a model like GPT-3, but with improved persistence and effectively no "context limit" since it is constantly learning and updating weights. Further development of this research will produce models that fulfill most of [...] --- Outline: (00:11) TL;DR (01:22) Overview (04:10) The Agenda I am Worried About (07:36) Concrete Predictions (09:29) What I think we should do --- First published: July 31st, 2025 Source: https://www.lesswrong.com/posts/tEZa7PouYatK78bbb/i-am-worried-about-near-term-non-llm-ai-developments --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Optimizing The Final Output Can Obfuscate CoT (Research Note)” by lukemarks, jacob_drori, cloud, TurnTrout 11:30

11:30  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked11:30

Liked11:30

Produced as part of MATS 8.0 under the mentorship of Alex Turner and Alex Cloud. This research note overviews some early results which we are looking for feedback on. TL;DR: We train language models with RL in toy environments. We show that penalizing some property of the output is sufficient to suppress that property in the chain of thought also, even when that property is relevant to task completion. For example, when we penalize a model for mentioning in its output that it completed a task via a certain form of cheating, its reasoning also omits this fact. We therefore suspect that training against an output monitor is sufficient to cause obfuscated CoTs in more realistic settings. Introduction Chain of thought (CoT) supervision appears in many control and scalable oversight protocols. It has been argued that being able to monitor CoTs for unwanted behavior is a critical property [...] --- Outline: (00:56) Introduction (02:38) Setup (03:48) Single-Turn Setting (04:26) Multi-Turn Setting (06:51) Results (06:54) Single-Turn Setting (08:21) Multi-Turn Terminal-Based Setting (08:25) Word-Usage Penalty (09:12) LLM Judge Penalty (10:12) Takeaways (10:57) Acknowledgements The original text contained 1 footnote which was omitted from this narration. --- First published: July 30th, 2025 Source: https://www.lesswrong.com/posts/CM7AsQoBxDW4vhkP3/optimizing-the-final-output-can-obfuscate-cot-research-note --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “About 30% of Humanity’s Last Exam chemistry/biology answers are likely wrong” by bohaska 6:40

6:40  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked6:40

Liked6:40

FutureHouse is a company that builds literature research agents. They tested it on the bio + chem subset of HLE questions, then noticed errors in them. The post's first paragraph: Humanity's Last Exam has become the most prominent eval representing PhD-level research. We found the questions puzzling and investigated with a team of experts in biology and chemistry to evaluate the answer-reasoning pairs in Humanity's Last Exam. We found that 29 ± 3.7% (95% CI) of the text-only chemistry and biology questions had answers with directly conflicting evidence in peer reviewed literature. We believe this arose from the incentive used to build the benchmark. Based on human experts and our own research tools, we have created an HLE Bio/Chem Gold, a subset of AI and human validated questions. About the initial review process for HLE questions: [...] Reviewers were given explicit instructions: “Questions should ask for something precise [...] --- First published: July 29th, 2025 Source: https://www.lesswrong.com/posts/JANqfGrMyBgcKtGgK/about-30-of-humanity-s-last-exam-chemistry-biology-answers --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

Maya did not believe she lived in a simulation. She knew that her continued hope that she could escape from the nonexistent simulation was based on motivated reasoning. She said this to herself in the front of her mind instead of keeping the thought locked away in the dark corners. Sometimes she even said it out loud. This acknowledgement, she explained to her therapist, was what kept her from being delusional. “I see. And you said your anxiety had become depressive?” the therapist said absently, clicking her pen while staring down at an empty clipboard. “No- I said my fear had turned into despair,” Maya corrected. It was amazing, Maya thought, how many times the therapist had refused to talk about simulation theory. Maya had brought it up three times in the last hour, and each time, the therapist had changed the subject. Maya wasn’t surprised; this [...] --- First published: July 27th, 2025 Source: https://www.lesswrong.com/posts/ydsrFDwdq7kxbxvxc/maya-s-escape --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Do confident short timelines make sense?” by TsviBT, abramdemski 2:10:59

2:10:59  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked2:10:59

Liked2:10:59

TsviBT Tsvi's context Some context: My personal context is that I care about decreasing existential risk, and I think that the broad distribution of efforts put forward by X-deriskers fairly strongly overemphasizes plans that help if AGI is coming in <10 years, at the expense of plans that help if AGI takes longer. So I want to argue that AGI isn't extremely likely to come in <10 years. I've argued against some intuitions behind AGI-soon in Views on when AGI comes and on strategy to reduce existential risk. Abram, IIUC, largely agrees with the picture painted in AI 2027: https://ai-2027.com/ Abram and I have discussed this occasionally, and recently recorded a video call. I messed up my recording, sorry--so the last third of the conversation is cut off, and the beginning is cut off. Here's a link to the first point at which [...] --- Outline: (00:17) Tsvis context (06:52) Background Context: (08:13) A Naive Argument: (08:33) Argument 1 (10:43) Why continued progress seems probable to me anyway: (13:37) The Deductive Closure: (14:32) The Inductive Closure: (15:43) Fundamental Limits of LLMs? (19:25) The Whack-A-Mole Argument (23:15) Generalization, Size, & Training (26:42) Creativity & Originariness (32:07) Some responses (33:15) Automating AGI research (35:03) Whence confidence? (36:35) Other points (48:29) Timeline Split? (52:48) Line Go Up? (01:15:16) Some Responses (01:15:27) Memers gonna meme (01:15:44) Right paradigm? Wrong question. (01:18:14) The timescale characters of bioevolutionary design vs. DL research (01:20:33) AGI LP25 (01:21:31) come on people, its \[Current Paradigm\] and we still dont have AGI?? (01:23:19) Rapid disemhorsepowerment (01:25:41) Miscellaneous responses (01:28:55) Big and hard (01:31:03) Intermission (01:31:19) Remarks on gippity thinkity (01:40:24) Assorted replies as I read: (01:40:28) Paradigm (01:41:33) Bio-evo vs DL (01:42:18) AGI LP25 (01:46:30) Rapid disemhorsepowerment (01:47:08) Miscellaneous (01:48:42) Magenta Frontier (01:54:16) Considered Reply (01:54:38) Point of Departure (02:00:25) Tsvis closing remarks (02:04:16) Abrams Closing Thoughts --- First published: July 15th, 2025 Source: https://www.lesswrong.com/posts/5tqFT3bcTekvico4d/do-confident-short-timelines-make-sense --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “HPMOR: The (Probably) Untold Lore” by Gretta Duleba, Eliezer Yudkowsky 1:07:32

1:07:32  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked1:07:32

Liked1:07:32

Eliezer and I love to talk about writing. We talk about our own current writing projects, how we’d improve the books we’re reading, and what we want to write next. Sometimes along the way I learn some amazing fact about HPMOR or Project Lawful or one of Eliezer's other works. “Wow, you’re kidding,” I say, “do your fans know this? I think people would really be interested.” “I can’t remember,” he usually says. “I don’t think I’ve ever explained that bit before, I’m not sure.” I decided to interview him more formally, collect as many of those tidbits about HPMOR as I could, and share them with you. I hope you enjoy them. It's probably obvious, but there will be many, many spoilers for HPMOR in this article, and also very little of it will make sense if you haven’t read the book. So go read Harry Potter and [...] --- Outline: (01:49) Characters (01:52) Masks (09:09) Imperfect Characters (20:07) Make All the Characters Awesome (22:24) Hermione as Mary Sue (26:35) Who's the Main Character? (31:11) Plot (31:14) Characters interfering with plot (35:59) Setting up Plot Twists (38:55) Time-Turner Plots (40:51) Slashfic? (45:42) Why doesnt Harry like-like Hermione? (49:36) Setting (49:39) The Truth of Magic in HPMOR (52:54) Magical Genetics (57:30) An Aside: What did Harry Figure Out? (01:00:33) Nested Nerfing Hypothesis (01:04:55) Epilogues The original text contained 26 footnotes which were omitted from this narration. --- First published: July 25th, 2025 Source: https://www.lesswrong.com/posts/FY697dJJv9Fq3PaTd/hpmor-the-probably-untold-lore --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “On ‘ChatGPT Psychosis’ and LLM Sycophancy” by jdp 30:05

30:05  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked30:05

Liked30:05

As a person who frequently posts about large language model psychology I get an elevated rate of cranks and schizophrenics in my inbox. Often these are well meaning people who have been spooked by their conversations with ChatGPT (it's always ChatGPT specifically) and want some kind of reassurance or guidance or support from me. I'm also in the same part of the social graph as the "LLM whisperers" (eugh) that Eliezer Yudkowsky described as "insane", and who in many cases are in fact insane. This means I've learned what "psychosis but with LLMs" looks like and kind of learned to tune it out. This new case with Geoff Lewis interests me though. Mostly because of the sheer disparity between what he's being entranced by and my automatic immune reaction to it. I haven't even read all the screenshots he posted because I take one glance and know that this [...] --- Outline: (05:03) Timeline Of Events Related To ChatGPT Psychosis (16:16) What Causes ChatGPT Psychosis? (16:27) Ontological Vertigo (21:02) Users Are Confused About What Is And Isnt An Official Feature (24:30) The Models Really Are Way Too Sycophantic (27:03) The Memory Feature (28:54) Loneliness And Isolation --- First published: July 23rd, 2025 Source: https://www.lesswrong.com/posts/f86hgR5ShiEj4beyZ/on-chatgpt-psychosis-and-llm-sycophancy --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Subliminal Learning: LLMs Transmit Behavioral Traits via Hidden Signals in Data” by cloud, mle, Owain_Evans 10:00

10:00  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked10:00

Liked10:00

Authors: Alex Cloud*, Minh Le*, James Chua, Jan Betley, Anna Sztyber-Betley, Jacob Hilton, Samuel Marks, Owain Evans (*Equal contribution, randomly ordered) tl;dr. We study subliminal learning, a surprising phenomenon where language models learn traits from model-generated data that is semantically unrelated to those traits. For example, a "student" model learns to prefer owls when trained on sequences of numbers generated by a "teacher" model that prefers owls. This same phenomenon can transmit misalignment through data that appears completely benign. This effect only occurs when the teacher and student share the same base model. 📄Paper, 💻Code, 🐦Twitter Research done as part of the Anthropic Fellows Program. This article is cross-posted to the Anthropic Alignment Science Blog. Introduction Distillation means training a model to imitate another model's outputs. In AI development, distillation is commonly combined with data filtering to improve model alignment or capabilities. In our paper, we uncover a [...] --- Outline: (01:11) Introduction (03:20) Experiment design (03:53) Results (05:03) What explains our results? (05:07) Did we fail to filter the data? (06:59) Beyond LLMs: subliminal learning as a general phenomenon (07:54) Implications for AI safety (08:42) In summary --- First published: July 22nd, 2025 Source: https://www.lesswrong.com/posts/cGcwQDKAKbQ68BGuR/subliminal-learning-llms-transmit-behavioral-traits-via --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “Love stays loved (formerly ‘Skin’)” by Swimmer963 (Miranda Dixon-Luinenburg) 51:27

51:27  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked51:27

Liked51:27

This is a short story I wrote in mid-2022. Genre: cosmic horror as a metaphor for living with a high p-doom. One The last time I saw my mom, we met in a coffee shop, like strangers on a first date. I was twenty-one, and I hadn’t seen her since I was thirteen. She was almost fifty. Her face didn’t show it, but the skin on the backs of her hands did. “I don’t think we have long,” she said. “Maybe a year. Maybe five. Not ten.” It says something about San Francisco, that you can casually talk about the end of the world and no one will bat an eye. Maybe twenty, not fifty, was what she’d said eight years ago. Do the math. Mom had never lied to me. Maybe it would have been better for my childhood if she had [...] --- Outline: (04:50) Two (22:58) Three (35:33) Four --- First published: July 18th, 2025 Source: https://www.lesswrong.com/posts/6qgtqD6BPYAQvEMvA/love-stays-loved-formerly-skin --- Narrated by TYPE III AUDIO .…

L

LessWrong (Curated & Popular)

1 “Make More Grayspaces” by Duncan Sabien (Inactive) 23:25

23:25  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked23:25

Liked23:25

Author's note: These days, my thoughts go onto my substack by default, instead of onto LessWrong. Everything I write becomes free after a week or so, but it's only paid subscriptions that make it possible for me to write. If you find a coffee's worth of value in this or any of my other work, please consider signing up to support me; every bill I can pay with writing is a bill I don’t have to pay by doing other stuff instead. I also accept and greatly appreciate one-time donations of any size. 1. You’ve probably seen that scene where someone reaches out to give a comforting hug to the poor sad abused traumatized orphan and/or battered wife character, and the poor sad abused traumatized orphan and/or battered wife flinches. Aw, geez, we are meant to understand. This poor person has had it so bad that they can’t even [...] --- Outline: (00:40) 1. (01:35) II. (03:08) III. (04:45) IV. (06:35) V. (09:03) VI. (12:00) VII. (16:11) VIII. (21:25) IX. --- First published: July 19th, 2025 Source: https://www.lesswrong.com/posts/kJCZFvn5gY5C8nEwJ/make-more-grayspaces --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

Content warning: risk to children Julia and I knowdrowning is the biggestrisk to US children under 5, and we try to take this seriously.But yesterday our 4yo came very close to drowning in afountain. (She's fine now.) This week we were on vacation with my extended family: nine kids,eight parents, and ten grandparents/uncles/aunts. For the last fewyears we've been in a series of rental houses, and this time onarrival we found a fountain in the backyard: I immediately checked the depth with a stick and found that it wouldbe just below the elbows on our 4yo. I think it was likely 24" deep;any deeper and PA wouldrequire a fence. I talked with Julia and other parents, andreasoned that since it was within standing depth it was safe. [...] --- First published: July 20th, 2025 Source: https://www.lesswrong.com/posts/Zf2Kib3GrEAEiwdrE/shallow-water-is-dangerous-too --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)

1 “Narrow Misalignment is Hard, Emergent Misalignment is Easy” by Edward Turner, Anna Soligo, Senthooran Rajamanoharan, Neel Nanda 11:13

11:13  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked11:13

Liked11:13

Anna and Ed are co-first authors for this work. We’re presenting these results as a research update for a continuing body of work, which we hope will be interesting and useful for others working on related topics. TL;DR We investigate why models become misaligned in diverse contexts when fine-tuned on narrow harmful datasets (emergent misalignment), rather than learning the specific narrow task. We successfully train narrowly misaligned models using KL regularization to preserve behavior in other domains. These models give bad medical advice, but do not respond in a misaligned manner to general non-medical questions. We use this method to train narrowly misaligned steering vectors, rank 1 LoRA adapters and rank 32 LoRA adapters, and compare these to their generally misaligned counterparts. The steering vectors are particularly interpretable, we introduce Training Lens as a tool for analysing the revealed residual stream geometry. The general misalignment solution is consistently more [...] --- Outline: (00:27) TL;DR (02:03) Introduction (04:03) Training a Narrowly Misaligned Model (07:13) Measuring Stability and Efficiency (10:00) Conclusion The original text contained 7 footnotes which were omitted from this narration. --- First published: July 14th, 2025 Source: https://www.lesswrong.com/posts/gLDSqQm8pwNiq7qst/narrow-misalignment-is-hard-emergent-misalignment-is-easy --- Narrated by TYPE III AUDIO . --- Images from the article:…

L

LessWrong (Curated & Popular)

1 “Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety” by Tomek Korbak, Mikita Balesni, Vlad Mikulik, Rohin Shah 2:15

2:15  Play Later

Play Later  Play Later

Play Later  Lists

Lists  Like

Like  Liked2:15

Liked2:15

Twitter | Paper PDF Seven years ago, OpenAI five had just been released, and many people in the AI safety community expected AIs to be opaque RL agents. Luckily, we ended up with reasoning models that speak their thoughts clearly enough for us to follow along (most of the time). In a new multi-org position paper, we argue that we should try to preserve this level of reasoning transparency and turn chain of thought monitorability into a systematic AI safety agenda. This is a measure that improves safety in the medium term, and it might not scale to superintelligence even if somehow a superintelligent AI still does its reasoning in English. We hope that extending the time when chains of thought are monitorable will help us do more science on capable models, practice more safety techniques "at an easier difficulty", and allow us to extract more useful work from [...] --- First published: July 15th, 2025 Source: https://www.lesswrong.com/posts/7xneDbsgj6yJDJMjK/chain-of-thought-monitorability-a-new-and-fragile --- Narrated by TYPE III AUDIO . --- Images from the article: Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts , or another podcast app.…

L

LessWrong (Curated & Popular)